In any project, and especially in open source, each proposed addition goes through iteration, testing, and critical discussion. Writing the code for a contribution is a significant step, but ensuring that it meets user needs, functions correctly, and integrates cleanly with the rest of the project requires robust user testing and a continuous loop of community feedback. There are several methods for testing contributions and several strategies for communicating with the community, both during development and when sharing the final outcome.

Before my contribution to Jellify reached the broader community, I thoroughly tested every change I made. This meant not just verifying that the code ran, but also checking how it interacted with other parts of the application. In many open-source environments, it is also invaluable to have someone else review your work at this stage: they might spot issues or suggest improvements from a different perspective before a pull request (PR) is even created.

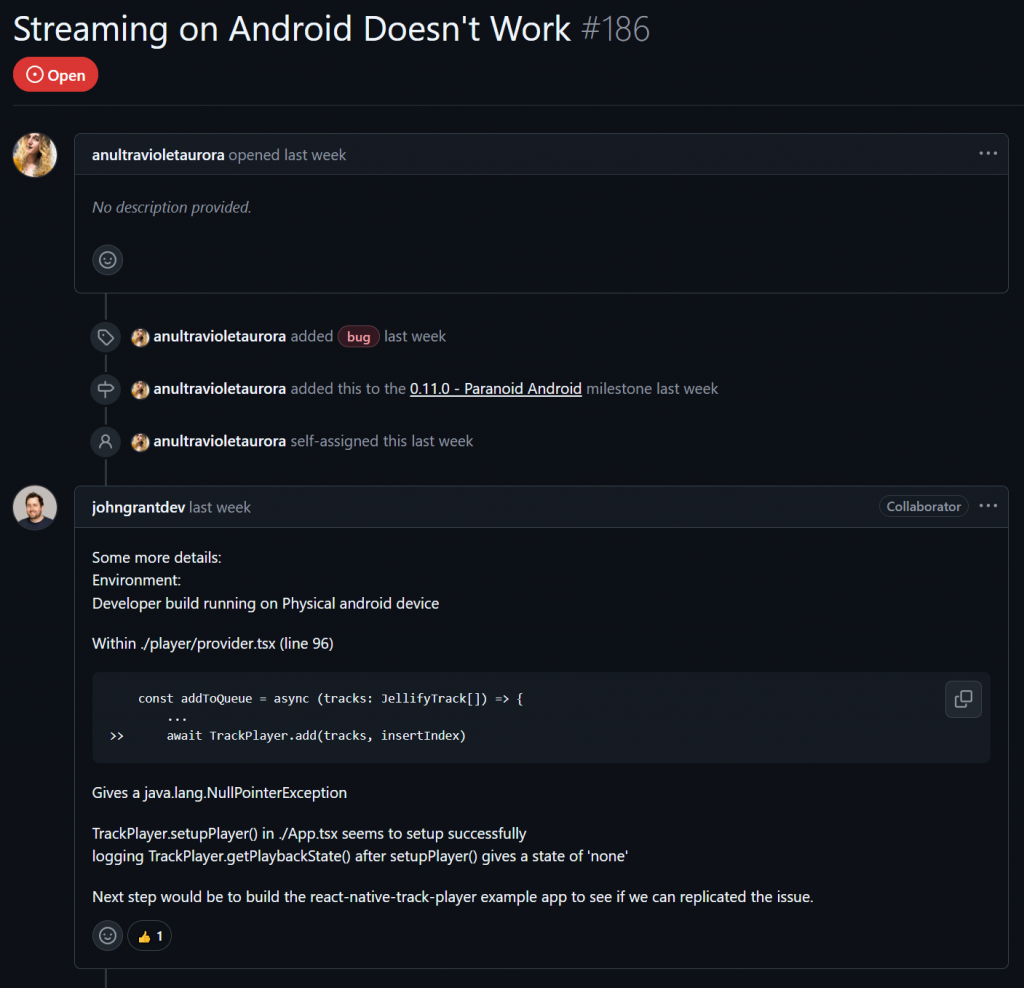

The PR itself serves as another critical feedback mechanism. When I submitted my changes to Jellify, the PR was more than an attempt to merge my code; it was an invitation for review. Maintainers and other interested community members could scrutinize the code, test the associated branch, and comment on specific lines or on the overall functionality. This is where detailed code reviews, automated checks, and manual testing by maintainers come into play, directly shaping the final contribution. Had it been a larger feature, opening a draft PR early on, even with incomplete code, would have been an effective way to signal work in progress and invite early, systematic feedback.

In my case, I was only able to test my changes using the Android emulator on my machine, so the project maintainer had to verify that the changes didn’t cause any problems on the Apple platform. Communication during this phase was key: the maintainer provided feedback directly on the PR, confirming that the changes worked as expected on Apple before merging, which kept everyone on the same page. Since no issues arose, the maintainer merged the code into the main codebase, making it available to everyone else working on the project.

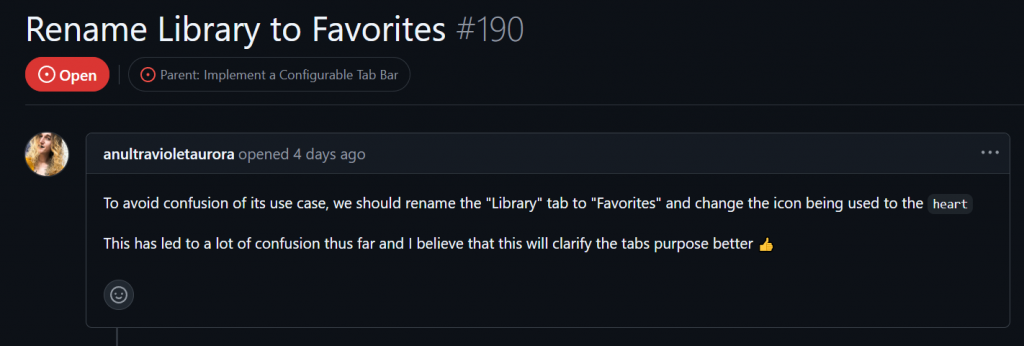

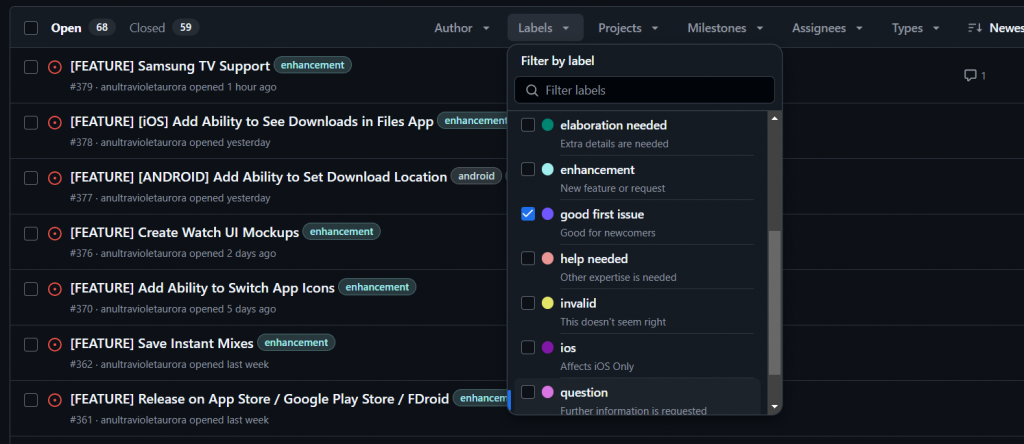

In addition to this “on-the-fly” testing during development, there is also formal user testing, such as alpha and beta releases. Jellify used this approach by releasing both stable and unstable builds for its community to try, typically announcing these releases and soliciting feedback through its Discord server. As the app was still in its early stages, all testing took place in the alpha phase, when the project’s core functionality was available but most other features were not yet ready or hadn’t even been started. Releasing the app to a wider audience of over 100 users and contributors meant that new problems and suggestions surfaced quickly, which in turn sped up bug fixes and feature enhancements.

Ultimately, user testing and community feedback are not merely steps in a process; they are the lifeblood of a thriving open-source project. They ensure that contributions are not just technically sound but also genuinely valuable to the people the project aims to serve. My experience with Jellify underscored how these feedback loops, facilitated by effective communication, lead to a more robust, user-friendly, and collaboratively built application.